What is AI?

Artificial Intelligence

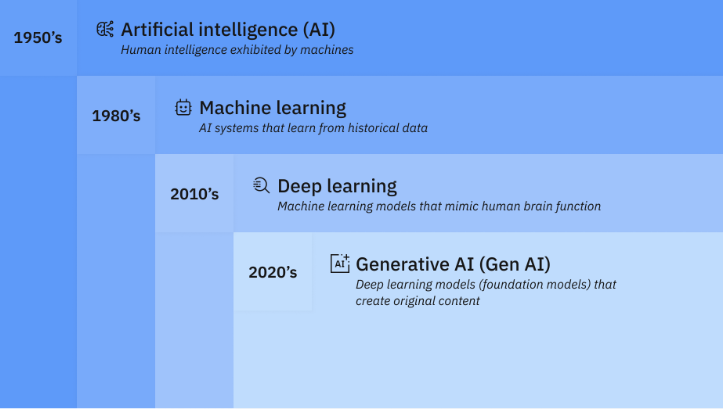

Artificial intelligence (AI) is technology that enables computers and machines to simulate human intelligence processes such as learning, comprehension, problem solving, decision making, creativity and autonomy.

History of AI

Throughout the centuries, thinkers from the Greek philosopher Aristotle to the 13th-century Spanish theologian Ramon Llull to mathematician René Descartes and statistician Thomas Bayes used the tools and logic of their times to describe human thought processes as symbols. Their work laid the foundation for AI concepts such as general knowledge representation and logical reasoning.

The 19th and early 20th centuries brought forth foundational work that would give rise to the modern computer. In 1836, Cambridge University mathematician Charles Babbage and Augusta Ada King, Countess of Lovelace, invented the first design for a programmable machine, known as the Analytical Engine. Babbage outlined the design for the first mechanical computer, while Lovelace, often considered the first computer programmer, foresaw the machine’s capability to go beyond simple calculations to perform any operation that could be described algorithmically.

As the 20th century progressed, key developments in computing shaped the field that would become AI. In the 1930s, British mathematician and World War II codebreaker Alan Turing introduced the concept of a universal machine that could simulate any other machine. His theories were crucial to the development of digital computers and, eventually, AI.

1940s

Princeton mathematician John von Neumann conceived the architecture for the stored-program computer: the idea that a computer’s program and the data it processes can both be kept in the computer’s memory. Warren McCulloch and Walter Pitts proposed a mathematical model of artificial neurons, laying the foundation for neural networks and other future AI developments.
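To make the McCulloch-Pitts idea concrete, here is a minimal sketch of a threshold unit in Python. It assumes the commonly taught simplified form of the model: inputs are binary, any active inhibitory input blocks firing, and otherwise the unit fires when the count of active excitatory inputs reaches a threshold. The function names and threshold values are chosen for illustration and are not taken from the original paper.

```python
def mcp_neuron(excitatory, inhibitory, threshold):
    """Return 1 if the unit fires, else 0. All inputs are 0 or 1."""
    # Any active inhibitory input vetoes firing outright.
    if any(inhibitory):
        return 0
    # Otherwise fire when enough excitatory inputs are active.
    return 1 if sum(excitatory) >= threshold else 0


# Basic logic gates expressed as single threshold units (illustrative).
def AND(a, b):
    return mcp_neuron([a, b], [], threshold=2)


def OR(a, b):
    return mcp_neuron([a, b], [], threshold=1)


def NOT(a):
    # No excitatory inputs: the unit fires unless the inhibitory input is on.
    return mcp_neuron([], [a], threshold=0)
```

Because such units can express basic logic gates, networks of them can in principle compute more complex logical functions, which is part of why the model proved influential.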

1950s

With the advent of modern computers, scientists began to test their ideas about machine intelligence. In 1950, Turing devised a method for determining whether a computer has intelligence. He called it the imitation game, but it has become more commonly known as the Turing test. This test evaluates a computer’s ability to convince interrogators that its responses to their questions were made by a human being.

The modern field of AI is widely cited as beginning in 1956 during a summer conference at Dartmouth College. Funded by the Rockefeller Foundation, the conference was attended by 10 luminaries in the field, including AI pioneers Marvin Minsky, Oliver Selfridge and John McCarthy, who is credited with coining the term “artificial intelligence.” Also in attendance were Allen Newell, a computer scientist, and Herbert A. Simon, an economist, political scientist and cognitive psychologist.

The two presented their groundbreaking Logic Theorist, a computer program capable of proving certain mathematical theorems and often referred to as the first AI program. A year later, in 1957, Newell and Simon created the General Problem Solver algorithm that, despite failing to solve more complex problems, laid the foundations for developing more sophisticated cognitive architectures.

1960s

In the wake of the Dartmouth College conference, leaders in the fledgling field of AI predicted that human-created intelligence equivalent to the human brain was around the corner, attracting major government and industry support. Indeed, nearly 20 years of well-funded basic research generated significant advances in AI. McCarthy developed Lisp, a language originally designed for AI programming that is still used today. In the mid-1960s, MIT professor Joseph Weizenbaum developed ELIZA, an early natural language processing (NLP) program that laid the foundation for today’s chatbots.

1970s

In the 1970s, artificial general intelligence (AGI) proved elusive rather than imminent, owing to limitations in computer processing and memory as well as the complexity of the problem. As a result, government and corporate funding for AI research declined sharply, along with interest in the nascent field, leading to a fallow period from 1974 to 1980 known as the first AI winter.

1980s

In the 1980s, research on deep learning techniques and industry adoption of Edward Feigenbaum’s expert systems sparked a new wave of AI enthusiasm. Expert systems, which use rule-based programs to mimic human experts’ decision-making, were applied to tasks such as financial analysis and clinical diagnosis. However, because these systems remained costly and limited in their capabilities, AI’s resurgence was short-lived, followed by another collapse of government funding and industry support. This period of reduced interest and investment, known as the second AI winter, lasted until the mid-1990s.
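The rule-based approach behind expert systems can be sketched in a few lines of Python. The example below assumes a simple forward-chaining strategy, in which rules fire repeatedly until no new conclusions can be derived. The `forward_chain` function, the `RULES` list and the fact names are all hypothetical, invented purely for illustration; real expert systems of the era were far larger and often handled uncertainty as well.

```python
def forward_chain(facts, rules):
    """Derive every conclusion reachable from the starting facts.

    Each rule is a (conditions, conclusion) pair: when all conditions
    are known facts, the conclusion becomes a known fact too.
    """
    known = set(facts)
    changed = True
    while changed:  # keep sweeping until a full pass adds nothing new
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and set(conditions) <= known:
                known.add(conclusion)
                changed = True
    return known


# Toy rule base (hypothetical, not taken from any real system).
RULES = [
    (("fever", "cough"), "possible_flu"),
    (("possible_flu", "shortness_of_breath"), "refer_to_physician"),
]

derived = forward_chain({"fever", "cough", "shortness_of_breath"}, RULES)
```

Here the second rule depends on the conclusion of the first, so the engine needs more than one pass. Every piece of knowledge has to be written out by hand as a rule, which hints at why such systems were costly to build and maintain.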

1990s

Increases in computational power and an explosion of data sparked an AI renaissance in the mid- to late 1990s, setting the stage for the remarkable advances in AI we see today. The combination of big data and increased computational power propelled breakthroughs in NLP, computer vision, robotics, machine learning and deep learning. A notable milestone occurred in 1997, when IBM’s Deep Blue defeated Garry Kasparov, becoming the first computer program to beat a reigning world chess champion in a match.

2000s

Further advances in machine learning, deep learning, NLP, speech recognition and computer vision gave rise to products and services that have shaped the way we live today. Major developments include the growth of Google’s search engine, which launched in 1998, and the 2001 launch of Amazon’s recommendation engine.

Also in the 2000s, Netflix developed its movie recommendation system, Facebook introduced its facial recognition system and Microsoft launched its speech recognition system for transcribing audio. IBM launched its Watson question-answering system, and Google started the self-driving car initiative that later became Waymo.

2010s

The decade between 2010 and 2020 saw a steady stream of AI developments. These include the launch of Apple’s Siri and Amazon’s Alexa voice assistants; IBM Watson’s victories on Jeopardy!; the development of self-driving features for cars; and the implementation of AI-based systems that detect cancers with a high degree of accuracy. The first generative adversarial network was developed, and Google launched TensorFlow, an open-source machine learning framework that is widely used in AI development.

A key milestone occurred in 2012 with the groundbreaking AlexNet, a convolutional neural network that significantly advanced the field of image recognition and popularized the use of GPUs for AI model training. In 2016, Google DeepMind’s AlphaGo model defeated world Go champion Lee Sedol, showcasing AI’s ability to master complex strategic games. The previous year, 2015, saw the founding of research lab OpenAI, which would make important strides in reinforcement learning and NLP in the second half of the decade.

2020s

The current decade has so far been dominated by the advent of generative AI, which can produce new content based on a user’s prompt. These prompts often take the form of text, but they can also be images, videos, design blueprints, music or any other input that the AI system can process. Output content can range from essays to problem-solving explanations to realistic images based on pictures of a person.

In 2020, OpenAI released the third iteration of its GPT language model, but the technology did not reach widespread awareness until 2022. That year, the generative AI wave began with the launch of image generators DALL-E 2 and Midjourney in April and July, respectively. The excitement and hype reached full force with the general release of ChatGPT that November.

OpenAI’s competitors quickly responded to ChatGPT’s release by launching rival large language model (LLM) chatbots, such as Anthropic’s Claude and Google’s Gemini. Audio and video generators such as ElevenLabs and Runway followed in 2023 and 2024.

Generative AI technology is still in its early stages, as evidenced by its ongoing tendency to hallucinate and the continuing search for practical, cost-effective applications. Regardless, these developments have brought AI into the public conversation in a new way, leading to both excitement and trepidation.